The Charm and Harm of Deepfake

The term deepfake has a negative association like fake news or fake media. There is an important difference. You don't need AI to create fake news. Everyone can just make something up and spread false information verbally, as graffiti, or with pen and paper.

Deepfake tools are available to everyone and will be very easy to use. More importantly, fake news is bad while deepfake can do good and bad things. This makes deepfake harder to categorize.

AI based optimization

Video and virtualization are becoming one. Think about something simple like virtual backgrounds in conference calls. Microsoft, Zoom and others improve quality of their virtual backgrounds based on machine learning. This turns a normal video feed from a webcam into a partially virtualized video to offer a new experience. The original video has been manipulated by AI. It's fake video in a sense but a good one that everyone is aware about and even enjoys. Obviously, those video effects are okay but not realistic enough (in 2021) to fool people.

The competition of the best AI methods in video conferencing has only just begun. New players like «Headroom» enter the market with more AI features. We will be seeing more and more AI including deepfake coming to your conference calls.

More AI in Headroom video conferencing

What if those video effects become so good and persuading that it will no longer be possible to distinguish real video from virtualized realities. Do we want to be notified about such manipulations or do we accept being deceived?

The trend of AI based video communication is irreversible. We still need a camera but not even a good one. Deep learning based methods for «Video Super-Resolution» and «Real-Time Super-Resolution» turn low-quality video into superb high-resolution quality. The processing doesn't happen locally on the enduser device. Low-quality video is transmitted to the cloud where video optimization happens in real-time. High-quality video gets then distributed to all attendees without a noticeable delay.

Video Super-Resolution optimizes low-quality video

Internet connections often provide more download than upload bandwidth while the upstream for video conferencing or live streaming requires more bandwidth than the same video as downstream. Optimizing a low-quality and low-bandwidth upstream in the cloud will therefore also offload the presenter's upstream connection. Such optimization from low-quality to high-quality video is based on deep learning. Real video is still the source while the result is synthetic and therefore partly virtualized. It's very similar to deepfake.

Metaverse

The next level of synthetic video and fully virtualized communication is what prominent providers such as Microsoft and Meta promote under the buzzword «Metaverse». We already entered the Metaverse. Even virtual backgrounds in video calls qualify as parts of the Metaverse. What makes the vision about the Metaverse complete is a fully virtualized experiment with virtual avatars in a virtual environment.

Both 3D renderings and video will require additional AI based optimization to improve the virtual experience. This will be some sorts of deepfakes applied on the user's upstreams in data centers. Deepfake will inevitably be a fixed component of the Metaverse.

Deepfake vs. 3D

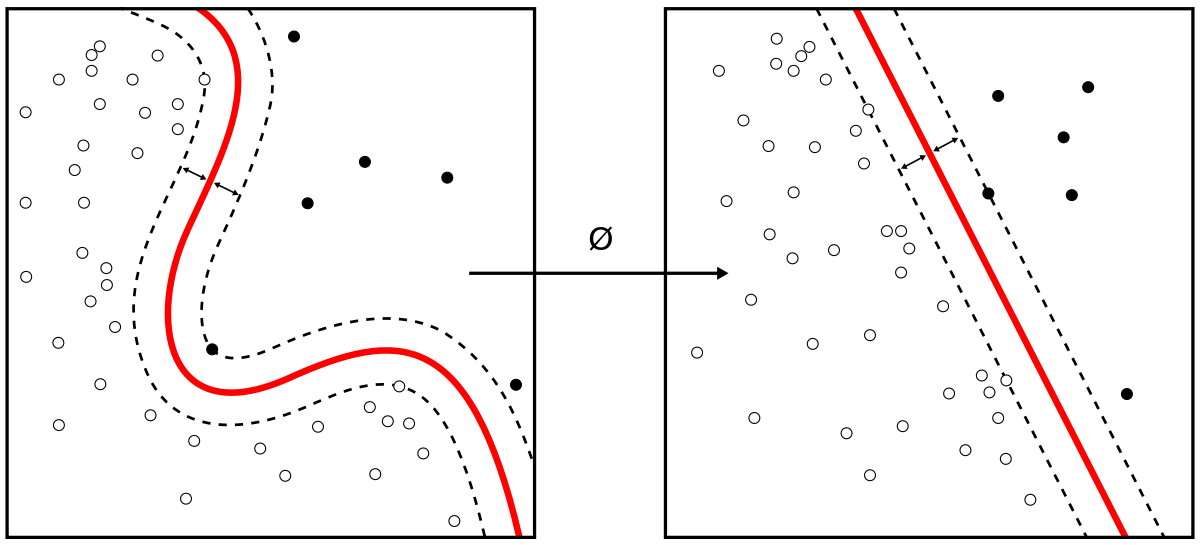

It will be interesting to see if 3D real-time animation keeps improving or if deepfake can outperform today's 3D technologies. The progress of «Generative Adversarial Networks» (GANs) and «Neural Radiance Fields» (NeRFs) looks promising.

Deepfakes can be created with the help of GANs

GANs and NeRFs take input data and generate interpolated output data with the support of machine and deep learning. This even allows the creation of volumetric scenes with deepfake entering the 3D space. Deepfake has already been recognized to produce more realistic results than 3D animation standards in leading Hollywood productions.

Deepfake created better results than Hollywood CGI

A New Reality

Video cameras will still be leveraged to capture motion and gestures but high-quality video recording won't be necessary anymore, except for nostalgic reasons to keep the look of real video rather than following the trend of virtualized reality.

Over time, not even a camera might be required anymore. AI could create gestures and motion based on some initial video sessions to train virtual avatars. Another device like a smartwatch could transfer motion information or the mood of our voice would translate into face mimics. And of course, your voice needs optimization too as we already highlighted in another article.