Analysis of Fake-Klitschko Video Conferences

After the amateurish deepfake attempt targeting Ukraine's President Volodymyr Zelensky, a fake version of Kyiv's mayor Vitali Klitschko duped European politicians and officials. But was it also a deepfake?

Background

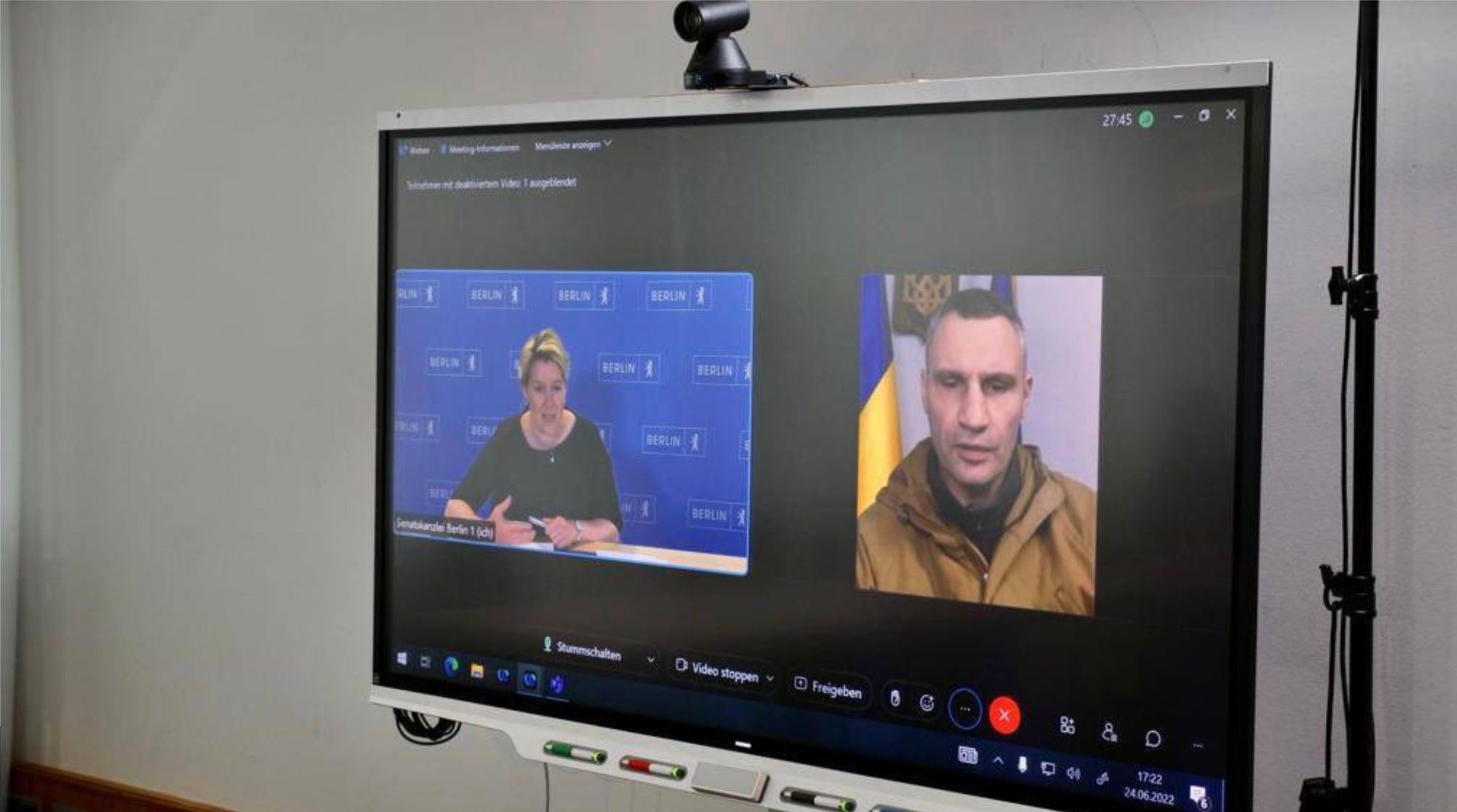

About 3 months ago, a pre-produced deepfake of President Zelensky was uploaded to a compromised Ukrainian news website. The infiltration of the website looked like the work of professional hackers, but the quality of the uploaded deepfake was amateurish. In the week of June 20 this year, a real-looking Vitali Klitschko joined three conference calls with the mayors of Berlin, Vienna and Madrid. It soon emerged that the person in the video calls was not the real Vitali Klitschko. The incident was quickly labeled a deepfake. Let's take a closer look.

In mid-2022, the standard procedure for creating a deepfake looked like this:

- Collecting sufficient audiovisual reference content of the deepfake target

- Training the AI software with the collected reference content

- Selecting a target video onto which the deepfake head and face are superimposed

- Tweaking parameters over several test runs until the result is satisfactory

- Optionally, further compositing the video in commercial software

The quality of the deepfake output depends on the quality of the collected content and on the experience of the person operating the AI software. The process is file-based, with reference files as the source and a deepfake file as the result.

So far, creating a good-quality deepfake as a live video stream has not worked well. However, the technology is evolving fast. Over the last few months, we have noticed increased activity in the deepfake community aimed at improving live deepfakes. Live deepfakes still require the training step. The main difference is that a live stream replaces the target video file onto which the deepfake is superimposed.

On June 28th, the FBI issued a public service announcement warning about an increase in live deepfakes in job interviews.

FBI warning about live deepfakes

Limitations

Deepfake technology in 2022 still has limitations:

- Complex hair structures, such as long curly hair, can be very challenging for AI algorithms and result in image distortions

- Glasses can cause similar problems depending on the design, reflections, viewing angle and other factors

- Voice deepfakes only work in English, or in English with a foreign accent

There are ways to work around these limitations. For example, an actor with similar hair can be recorded in a studio. The deepfake is then applied only to the face, while the hair belongs to the real actor. Hair and make-up designers are needed to replicate characteristic hairstyles. In 2022, what starts as the idea of quickly creating an AI deepfake on a PC can turn into a complex, movie-like production in which human professionals work around the limitations of AI.

As we described in another article, voice deepfakes (in 2022) were even more challenging to create than video deepfakes. Realistic voice deepfakes only worked in English. The latest technology (in 2022) supported voice deepfakes in English with foreign accents. Vgency demonstrated this capability for a French enterprise customer by producing the voice of a CxO speaking English with a French accent. The deepfake voice sounded as real as the target person's actual voice. To train the AI, we had to use specific text scripts, which the target person read aloud after granting permission to record and use the recordings. A simpler alternative used in many deepfake productions is a voice artist who mimics the original voice.

So, what about the fake-Klitschko in the video conferences? Was it a deepfake?

Doubts

German investigative journalist Daniel Laufer raises valid questions about whether the fake-Klitschko was indeed a deepfake.

This sounds a lot like this incident, back then it wasn’t an actual deep fake and yet it was at first suspected to have been one. European mayors and an EU commissioner received video calls allegedly from the Kyiv mayor, several fell for it: https://t.co/PemYyqjzzz

— Daniel Laufer (@DanielLaufer) May 24, 2023

It turned out…

He found the source video on YouTube and was able to match still images from the video conferences to identical frames in the YouTube version. He argued that a deepfake would have altered the frames so that they no longer matched the YouTube version. He concluded that several pre-produced video sequences could have been mixed into the video conference feeds as a live stream. In that case, the video might not have been deepfaked at all, and a voice impersonator might have imitated Klitschko's voice.

Klitschko interview used as source for conference call fake
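Laufer's argument rests on frame matching: a still from the conference either corresponds to a frame of the YouTube interview or it does not. The source does not say how he compared the frames. One common automated way to test such a match is a perceptual hash, which tolerates re-encoding but changes when image content changes. The following is a minimal Python sketch, assuming grayscale frames that have already been decoded into lists of pixel values; the functions `average_hash` and `hamming` and the synthetic frames are hypothetical illustrations, not Laufer's method.

```python
# Hypothetical sketch: perceptual (average) hashing to test whether a
# video-conference still matches a frame from a candidate source video.
# Frames are grayscale images as lists of rows of 0-255 ints; decoding
# real video frames is out of scope here.
from typing import List

Image = List[List[int]]


def average_hash(img: Image, size: int = 8) -> int:
    """Shrink img to size x size cells by block averaging, then emit one
    bit per cell: 1 if the cell is brighter than the mean of all cells.
    Assumes img is at least size x size pixels."""
    h, w = len(img), len(img[0])
    cells = []
    for by in range(size):
        for bx in range(size):
            rows = range(by * h // size, (by + 1) * h // size)
            cols = range(bx * w // size, (bx + 1) * w // size)
            block = [img[y][x] for y in rows for x in cols]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits in which two hashes differ."""
    return bin(a ^ b).count("1")


# Synthetic 64x64 "source frame": a checkerboard of 8x8 blocks.
source = [[200 if (x // 8 + y // 8) % 2 == 0 else 50 for x in range(64)]
          for y in range(64)]

# Same frame with mild, deterministic "re-encoding" noise of +/-5.
recoded = [[min(255, max(0, source[y][x] + (x * 7 + y * 3) % 11 - 5))
            for x in range(64)] for y in range(64)]

# Same frame with one 16x16 region (think: the mouth area) replaced.
altered = [row[:] for row in source]
for y in range(40, 56):
    for x in range(24, 40):
        altered[y][x] = 250 - source[y][x]

h_src = average_hash(source)
print(hamming(h_src, average_hash(recoded)))  # 0
print(hamming(h_src, average_hash(altered)))  # 4
```

In this toy example, the noisy copy hashes identically to the source, while the edited region flips 4 of the 64 bits. Because such a coarse hash reacts only weakly to a small, localized edit like a replaced mouth, a careful comparison would use a strict threshold or additionally compare the face region pixel by pixel.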

Daniel Laufer provided an excellent analysis without being able to review a recording of the actual video conferences. We tend to agree that a live deepfake would not have been the optimal approach. However, it would not have been impossible either, given the following conditions:

- There is plenty of public image content available to train the AI software

- There is plenty of public audio content available in English

- Vitali Klitschko has short hair with little complexity

- Vitali Klitschko doesn't wear glasses

- Only one camera is used

- Camera and image are very static

- It's possible to superimpose only deepfake-generated lips and mouth

- Deepfake errors are less noticeable at the low quality typical of video conferencing

An older alternative to deepfakes is «Face Reenactment», in which the expressions of the lips, mouth, jaw, eyebrows and forehead follow the webcam input of a source actor. This older technique is not AI-based and runs in real time on ordinary commodity PCs.

Technically, it is not difficult to create a deepfake from this specific Klitschko interview on YouTube by replacing only the lips and mouth and leaving the rest of the video unaltered, including all head and body movements. However, face reenactment combined with a voice impersonator can achieve the same in real time with far fewer technical requirements. It's plausible that this was a so-called «cheap fake».

Equally important, such cyberattacks on video conferences are possible with or without injecting a deepfake.

Missing Security Measures

Why was it so easy to arrange video conference calls under a prominent fake identity with the mayors of three European capital cities?

It's shocking how little attention local officials pay to high IT security standards. Details about how the conference calls were prepared have not been published, but it's reasonable to assume that a typical phishing scheme was used. Video conferences are arranged via email invites, so the emails and email domains must have appeared genuine to all three mayors' offices. Official pictures of the video conferences in Berlin and Vienna show that the video calls took place via Cisco Webex. The Vienna office used a Cisco Webex Room 70 device.

With video communication and home office taking off during the COVID pandemic, security breaches started to skyrocket. Home office and video communication can help people be more productive and better manage their work-life balance. What is lacking is awareness of online and cyber security in every aspect of the digital lifestyle. Naivety and carelessness have been a problem for years, with fake news twisting people's minds on social media. A fake Klitschko appearing in official video conferences marks a new level, fooling public officials who normally warn their voters about fake news.

Officials need to learn from this incident and better understand the risks of manipulation. Official communication needs to be verifiable.

Regulations

Finally, video conferencing providers did not prioritize deepfake protection in their solutions. In 2022, not a single mechanism was available in the market-leading solutions from Microsoft, Zoom or Cisco to help prevent deepfakes in official video or audio conferences. The opposite is true: virtual backgrounds and low video (and audio) quality make things easier for deepfake attackers. Even if the fake-Klitschko was not a deepfake, the video clearly didn't come from a real camera.

Deepfake protection needs to be a requirement for digital communication. Protection features need to be part of every virtual communication solution and service. The hype around the Metaverse needs to take a break. Tech companies need to acknowledge the risks the Metaverse introduces. We cannot let ourselves be flooded with virtual avatars, virtual meeting rooms, virtual backgrounds, face and voice enhancements, and AI features in general without keeping control in the real world. Reality needs to be verifiable, in the Metaverse too.