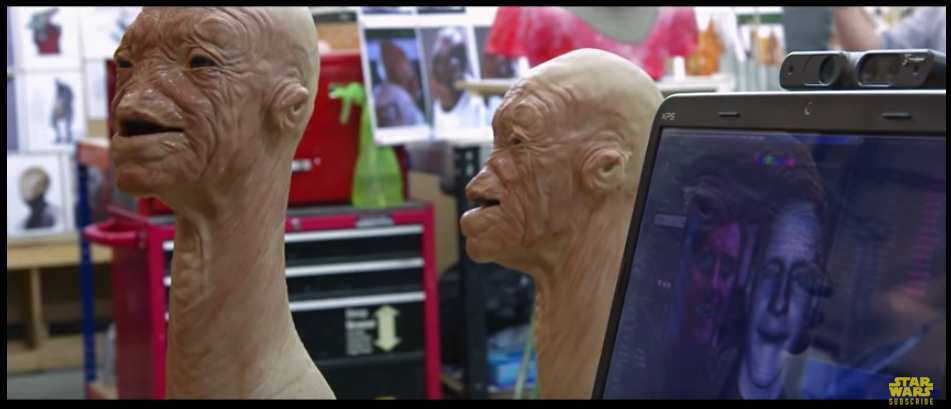

How to Regulate Deepfake

Regulating AI is a growing trend, and deepfakes, as a subset of generative AI, receive special attention.

Passing new laws that follow technology trends is already a challenge for legislators. Keeping pace with AI is an even greater task, and Vgency agrees with those who point out how difficult it is.

Vgency also agrees with Microsoft president Brad Smith, who stated that deepfakes are one of the biggest concerns about AI. While we agree, let's also look at priorities. First, virtual backgrounds came to Microsoft Teams, which are a sort of deepfake, as we wrote at the end of 2021. At the end of May 2023, Microsoft announced the availability of avatars for Microsoft Teams. These virtualization features are not necessarily harmful, but there is potential for misuse if the person behind an avatar or deepfake is using a fake identity.

The idea of flagging AI-generated content doesn't solve the problem of fake profiles. Vgency believes that people want to use avatars and even deepfake versions of themselves, and there are good and useful use cases for this. Flagging such content as AI-generated makes sense, and we support the idea. However, we also need proper verification so that only officially identified people can appear behind avatars and deepfakes, and only behind those they are authorized to use.

Apple introduced virtual avatars after it acquired the Zurich-based startup Faceshift in 2015. We are tracking Apple's progress with great interest, not only because Vgency is also based in the Zurich area, but also because Apple owns complementary solutions that can be used for verification: Face ID and iCloud.

Face ID does more than unlock your iPhone. It scans the user's real face and creates a profile, based on image and depth data, for a specific user account. Naturally, users who create an avatar on an Apple device would want to use these avatars, called Memoji, with Apple communication tools such as Messages or FaceTime. This would connect the Memoji with the user's Apple ID, which is part of the user's iCloud account. Face ID + Apple ID + Memoji would allow the creation of a verified avatar.
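The "face check + account + avatar" combination can be sketched in a few lines. This is a minimal illustration only, not Apple's implementation or any real Apple API: the names `PLATFORM_KEY`, `VerifiedAvatar`, `issue_verified_avatar`, and `is_authorized` are hypothetical, and the face check is reduced to a boolean that a biometric system would supply.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical platform secret (assumption); a real system would keep
# key material in secure hardware, not in source code.
PLATFORM_KEY = b"demo-only-secret"


@dataclass(frozen=True)
class VerifiedAvatar:
    account_id: str  # stands in for an account such as an Apple ID
    avatar_id: str   # stands in for an avatar such as a Memoji
    tag: str         # keyed hash binding the account and the avatar


def _binding(account_id: str, avatar_id: str) -> str:
    """Compute the keyed hash over the account/avatar pair."""
    message = f"{account_id}|{avatar_id}".encode()
    return hmac.new(PLATFORM_KEY, message, hashlib.sha256).hexdigest()


def issue_verified_avatar(account_id: str, avatar_id: str,
                          face_match: bool) -> VerifiedAvatar:
    """Bind an avatar to an account, but only after a successful face check."""
    if not face_match:
        raise PermissionError("face verification failed; avatar not issued")
    return VerifiedAvatar(account_id, avatar_id, _binding(account_id, avatar_id))


def is_authorized(avatar: VerifiedAvatar) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = _binding(avatar.account_id, avatar.avatar_id)
    return hmac.compare_digest(expected, avatar.tag)
```

In this sketch, an avatar presented under a different account fails the check because the tag no longer matches, which is the property a verified avatar would need.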

There are still limitations. One example is that Face ID has been unable to distinguish identical twins. Such edge cases will likely be resolved with improved sensor and processing technology. Apple offers a promising, highly secure ecosystem that could potentially master the challenge of verified avatars. On the other hand, the ecosystem is vendor-locked and built on closed IP.

Open Source by Law

AI develops so quickly that it's difficult to keep up. We already explained in our first article that detecting deepfakes with AI-based methods is a cat-and-mouse game, because AI-based deepfake detectors are trained on available deepfake data. The latest deepfake technology will therefore always be one step ahead of deepfake detection. It's useless to think about strict deepfake regulations, as in China, if the latest deepfake technology creates content that cannot be detected as a deepfake. Flagging AI-generated content, as required in the European Union, is only applicable to established platforms and solutions. It won't prevent misuse of AI by cybercriminals who leverage unregulated open-source applications on a PC.
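The cat-and-mouse dynamic can be illustrated with a toy simulation. This is a deliberately simplified sketch, not a real detector: each piece of content is reduced to a single made-up "artifact score", and the means and spread used below are arbitrary assumptions chosen only to show the effect.

```python
import random


def sample_scores(mean: float, n: int, rng: random.Random) -> list[float]:
    """Draw toy artifact scores; higher means more visible generation artifacts."""
    return [rng.gauss(mean, 0.05) for _ in range(n)]


def fit_threshold(real: list[float], fake: list[float]) -> float:
    """Toy detector: place the threshold midway between the two class means."""
    return (sum(real) / len(real) + sum(fake) / len(fake)) / 2


def detection_rate(fakes: list[float], threshold: float) -> float:
    """Share of fakes whose score exceeds the detector's threshold."""
    return sum(score > threshold for score in fakes) / len(fakes)


rng = random.Random(0)  # fixed seed so the run is repeatable
real = sample_scores(0.20, 1000, rng)
old_fakes = sample_scores(0.80, 1000, rng)  # generator the detector was trained on
new_fakes = sample_scores(0.25, 1000, rng)  # newer generator with fewer artifacts

threshold = fit_threshold(real, old_fakes)
rate_old = detection_rate(old_fakes, threshold)
rate_new = detection_rate(new_fakes, threshold)
print(f"detected old fakes: {rate_old:.0%}, detected new fakes: {rate_new:.0%}")
```

The detector catches nearly all fakes from the generator it was trained on and almost none from the newer one, until it is retrained on fresh examples.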

If flagging all AI-generated content is the goal, then reliable and up-to-date detection mechanisms are required. Developers of such detection solutions need to understand the algorithms and patterns that create deepfakes in order to train their AI-based detection. The easiest path would be for relevant AI technology to be open source and accessible. Where it is not, it would need to be legal to reverse-engineer powerful AI technology and make the results open source. This, too, would need to be regulated in order to protect the IP of useful AI.

The collective intelligence of an open-source community can empower more people to study and investigate AI. This might help deepfake detection keep up with the fast pace of development. Allowing reverse engineering of powerful AI might also reveal flaws and weaknesses that would otherwise remain undetected. The human factor is not to be underestimated.